Introduction

We present several Q&As about deep Q-Leaning discussed by Fu et al., 2019. In particular, we try to address the following questions:

- What’s the effect of function approximation on convergence?

- What’s the effect of sampling error and overfitting?

- What’s the effect of the target \(Q\) network and data distribution mismatch?

- What’s the best data distribution for \(Q\) learning?

Function Approximation

It is known that function approximation, bootstrapping, and off-policy training comprise the deadly triad(Sutton&Barto 2018). Fu et al. 2019 studied the effect of function approximation on convergence, and found suprisingly that when the representational capacity of the function approximation is high, function approximation error is not the major problem in \(Q\)-learning algorithms. This makes sense in light of the theory: a high-capacity function approximator can perform a nearly perfect projection of the backed up \(Q\)-function, thus mitigating potentially convergence issues due to an imperfect \(\ell^2\) norm projection(Recall that the Bellman operator is a \(\gamma\)-contraction in \(\ell^\infty\) norm, i.e., infinity norm). Furthermore, when the target is bootstrapped, we must be able to represent all \(Q\)-function along the path to the solution, and not just the final result (Bertsekas & Tsitsiklis, 1996). This observation implies that using large architectures is crucial not only because they have capacity to represent a better solution, but also because they are significantly easier to train using bootstrapping, and suffer less from nonconvergence issues.

Sampling Error and Overfitting

As the Bellman error is estimated by a batch of samples, sampling error introduced by specific sampling strategy can potentially lead to overfitting. This can be further exacerbated by increasing the network update frequency. An interesting observation from their experiments in overfitting is that, despite bias due to distribution mismatch, training \(Q\) networks with data from the replay buffer results in the lowest on-policy validation loss compared to those trained with on-policy data.

We can mitigate overfitting using replay buffer and early stopping. On the other hand, common regularization techniques in supervised learning that reduce the capacity, e.g, l2 regularization, may fall out of favor as the harm from the function approximation bias outweighs the harm from increasing overfitting with large models.

Non-Stationarity

Nonstationarity occurs in two places: in the changing target values \(\mathcal TQ\) and in a changing data distribution \(\mu\)(“distribution shift”). Surprisingly, Fu et al. found in experiments that nonstationarities in both distributions and target values do not cause significant stability issue. On the other hand, they believed that a slowly moving average target may have benefits under heavily approximation error, which suggests an adaptive update scheme for target network: slow the target updates when the network is weak, and increase the target update rate at the convergence.

In the above lecture, Hasselt also mentioned that the target network is not necessary for stability, especially when the value network is large. One hypothesis is that when the value network is large, the update of the value function may not be generalized to the next state, which helps stabilize the target value.

Distribution Shift

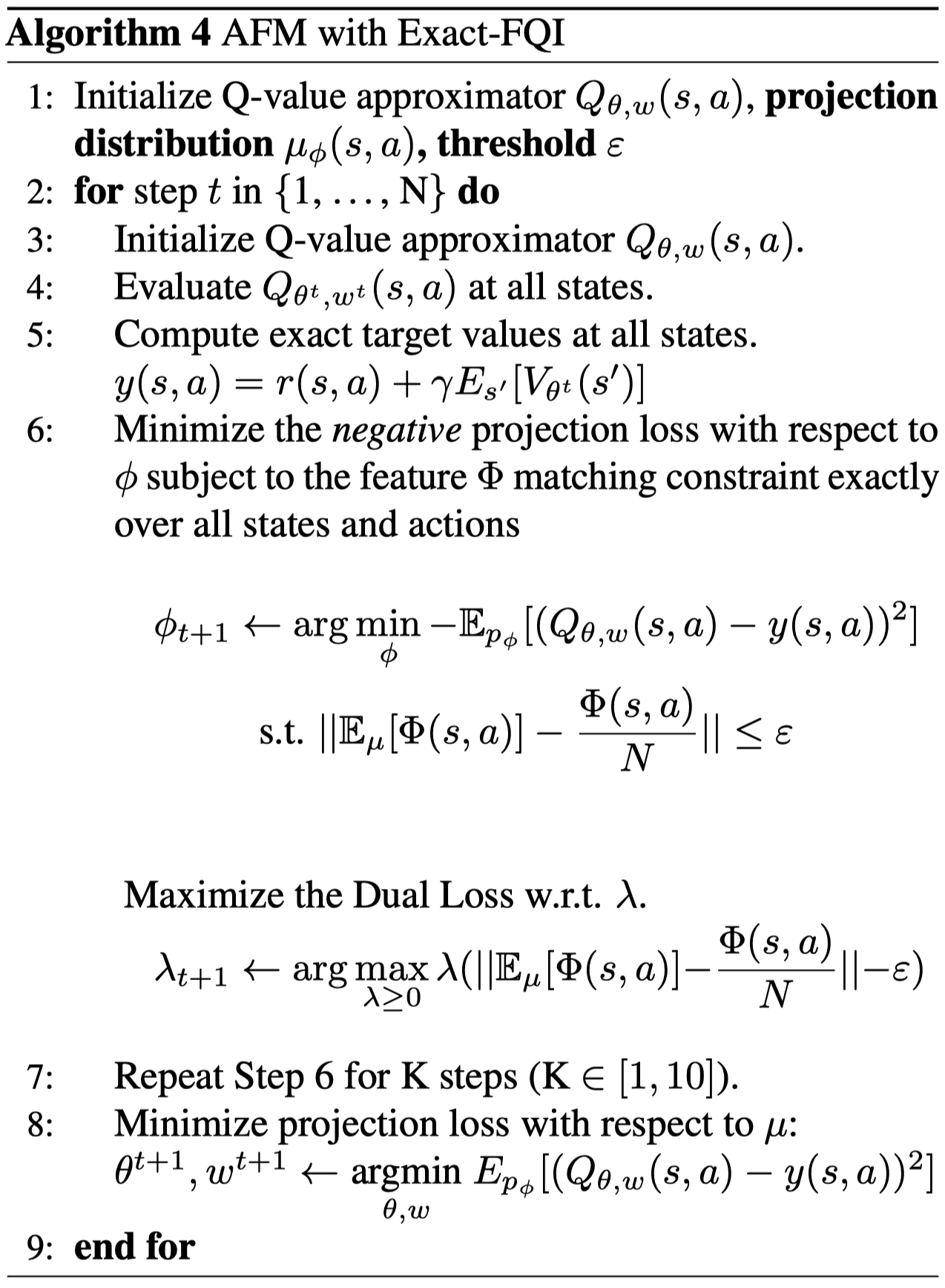

The on-policy data is not always favorable; A broader and higher-entropy data distribution that have higher state-action coverage was found to perform better, regardless of distributional shift. The authors proposed a method named Adversarial Feature Matching(AFM) that showed better experimental results than PER. Specifically, AFM optimizes the following constraint optimization problem

\[\begin{align} \underset{\theta}\min\underset{\phi}\max\mathbb E_{p_{\phi}(s,a)}&\left[\big(Q_\theta(s,a)-(r+\underset{a^\*}{\text{argmax}}Q_{\theta^-}(s',a^\*))\big)^2\right]\\\ s.t.\quad&\Vert\mathbb E_{p_\phi(s,a)}[\Phi(s)]-\mathbb E_{p_u}[\Phi(s_i)]\Vert<\epsilon \end{align}\]where \(\Phi\) is the feature vector from the last hidden layer of the \(Q\) network, \(p_u\) is a uniform sampling distribution over the replay, and \(p_\phi\) is a parameterized sampling distribution, which tries to maximize the Bellman error.

The inner constrained maximization problem aims to find a sampling distribution \(p_\phi\) such that it maximizes the Bellman error while roughly match the expected feature vector under uniform sampling from the replay buffer; the motivation is pretty similar to prioritized experience replay.

We solve the inner constrained maximization problem using dual gradient descent(Line 6 in Algorithm 4), which has been covered in our previous post. Then we plug the solution into the residual error to solve the outside minimization over \(\theta\)(Line 8). In practice, they perform 10 gradient steps for the inner problem every one gradient step of the outer problem.

References

Justin Fu, Aviral Kumar, Matthew Soh, Sergey Levine. Diagnosing Bottlenecks in Deep Q-learning Algorithms